Organizations are looking to build smaller applications (with lesser code) to perform a specific function. With the emergence of data-driven applications, organizations face challenges in integrating complex data from multiple sources. Large-scale data processing requires real-time data importing and processing techniques. This is where data pipelines play a crucial role.

Effectively, data pipelines can compile and analyze large and complex data sets with functions that can convert raw data into valuable information.

What is a Data Pipeline?

By definition, a data pipeline is a process that moves data from its source to its destination, which could be a data lake or warehouse. These use a set of functions, tools, and techniques to process and convert the raw data. This process comprises multiple steps, including:

- The data source (or where the data originates).

- Data processing – the step that ingests data from its source, transforms it and then stores it in the data repository.

- The destination for storing the data.

Although these steps sound simple, data pipeline architectures exhibit complexity due to the massive volume and complexity of the raw data.

In recent times, data pipelines have evolved to support Big Data and its three primary traits – velocity, volume, and variety. Hence, data pipelines must support:

- Velocity of Big Data: Capture and process data in real time.

- Volume of Big Data: Scale up to accommodate real-time data spikes.

- Variety of Big Data: Quickly identify and process structured, unstructured, and hybrid data.

Next, let us examine the various parts of a data pipeline.

SUGGESTED READ - Making Test Automation efficient with Data-driven Testing

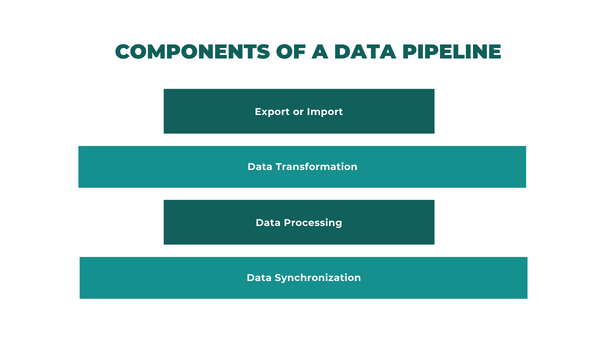

Components of a Data Pipeline

Although data pipelines can differ based on usage, listed below are its common parts or components:

1. Export or Import

This component covers the data input process (from its source). Entry points typically include data (or IoT) sensors, processing applications, social media inputs, and APIs. Furthermore, data pipelines can extract information from data pools and storage systems.

2. Data Transformation

This component includes all the data changes (or transformations) as it moves across systems. Data changes in accordance with the data format supported by the target application (for example, data analytics).

3. Data Processing

This component involves the tasks and functions required to import, extract, convert, and upload the data to its target output. Data processing also includes tasks like data merging and sorting.

4. Data Synchronization

This final component is all about synchronizing data between all supported data sources and the endpoints of the data pipeline. This component involves maintaining data consistency (through the pipeline lifecycle) by updating the data libraries.

Next, let us discuss the benefits (or advantages) of implementing a data pipeline.

Advantages of Data Pipeline

A well-designed and efficient data pipeline is reusable for new data flow, thus improving the efficiency of the data infrastructure. They can also improve the visibility of the data application, including the data flow and deployed techniques. Data professionals can configure the telemetry of the data across the pipeline, thus enabling continuous monitoring.

Moreover, they can improve the shared understanding of all the data processing operations. This enables data teams to explore and organize new data sources while decreasing the cost and time of adding new data streams. With improved visibility, organizations can extract accurate data, thus enhancing the data quality.

What are the crucial design considerations when implementing data pipelines? Let’s discuss that next.

Ready to Get Started?

Let our team experts walk you through how ACCELQ can assist you in achieving a true continuous testing automation

Considerations of Data Pipeline

To implement a successful data pipeline, development teams must address multiple considerations in the form of the following questions:

- Does the pipeline need to process streaming data?

- What is the type and volume of processed data required for the business need?

- Is the Big Data generated on-premises or in the cloud?

- Which are the underlying technologies used for implementation?

Here are some preliminary considerations to factor during design:

- Data origin or source is understood and shared among all stakeholders. This can minimize inconsistencies and work around assumptions about the data structure.

- The data type is an important aspect of the pipeline design. Data type typically comprises event-based data and entity data. Event-based data is denormalized and describes time-based actions. Entity data is normalized and describes a data entity at the current time point.

- Data timeliness is the rate at which data must be collected from its production system. Data pipelines can vary widely based on the speed of data ingestion and processing.

Finally, let us consider the best examples of data pipelines.

The Examples

Depending on the business need, data pipeline architectures can vary in design. For example, a batch-driven pipeline is deployed for point-of-sale (POS) systems as they generate arge data points. Further, a streaming pipeline processes real-time data from POS systems and feeds the output to data stores and

Another example is the Lambda architecture, which combines both batch and streaming pipelines within a single architecture. This architecture is widely deployed for processing Big Data.

Here are some of the real-life case studies:

- HomeServe uses a streaming pipeline to move data to Google BigQuery. This optimizes the machine learning data model used in the leak detection device, LeakBot.

- Macy’s retail store changes streaming data from its on-premises database to the Google Cloud. This provides a unified shopping experience for its online or in-store customers.

Conclusion

With the growing volume and complexity of data, organizations must modernize their data pipelines to continue extracting valuable insights. As outlined in this article, data pipelines offer multiple business benefits to data-dependent organizations.

ACCELQ offers a codeless approach to data-based automation for the benefit of its global customers. Our technology solutions range from enterprise automation to outcome-based services.

Looking to improve the effectiveness of your data pipeline? We can help you out. Sign up today for a product demo!

Related Posts

Software Testing Trends to Look Out For in 2025

Software Testing Trends to Look Out For in 2025

Software Testing Trends to Look Out For in 2025

Master Test Case Writing for Better QA Outcomes

Master Test Case Writing for Better QA Outcomes