How AI Application Security Testing Transforms App Protection?

Every business runs on applications. They handle data, connect users, and drive daily operations. That makes them a prime target for attackers. The problem is, most security testing still relies on older methods that simply can’t keep pace.

AI application security testing offers a way forward. It looks at massive sets of data, spots weak points faster, and learns from every scan. Instead of chasing threats after they happen, it helps teams predict and prevent them.

Let’s look at what slows traditional testing down, how AI improves SAST/DAST/IAST, and which approaches actually work in practice.

- The Gaps in Traditional Security Testing

- How AI Improves SAST/DAST/IAST?

- Benefits and Challenges of AI in App Security Testing

- Techniques That Make AI Testing Work

- How to Secure AI-Based Applications?

- AI App Security Testing Tools to Evaluate

- Frameworks That Guide AI Security

- How AI Improves Application Security

- Conclusion

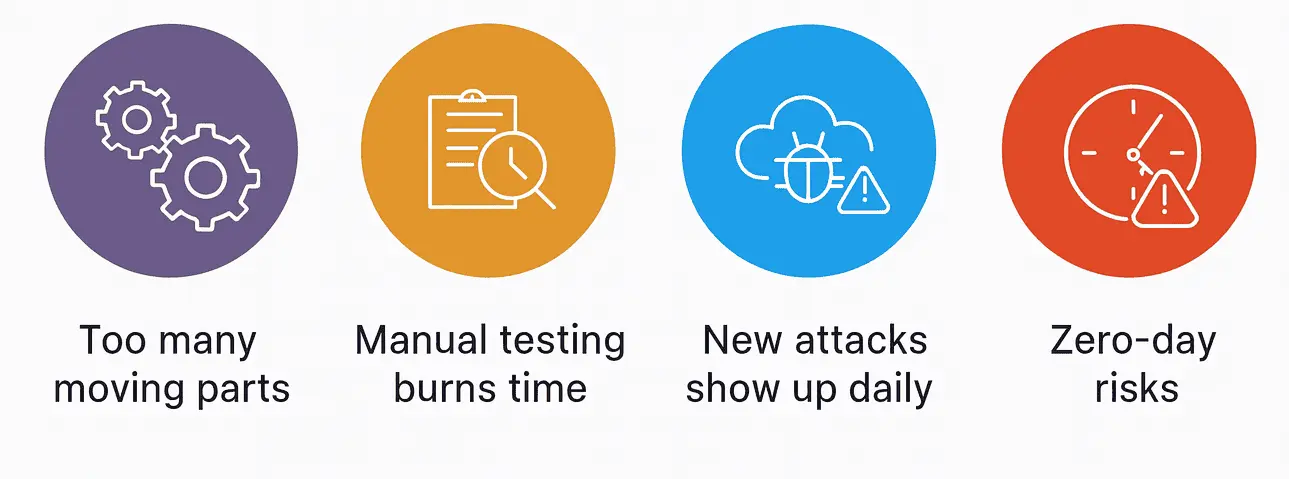

The Gaps in Traditional Security Testing

Manual and static testing methods worked when applications were smaller. Now they run across multiple services, clouds, and APIs. That complexity introduces problems:

1. Too many moving parts.

Modern apps combine APIs, microservices, and third-party modules. Testing each piece separately leaves blind spots attackers can exploit.

2. Manual testing burns time.

Human testers can’t possibly check thousands of updates or configuration changes each sprint. Even good teams miss small issues that turn into serious flaws.

3. New attacks show up daily.

Threats evolve faster than static scanners can adapt. Once you fix one hole, two more appear somewhere else.

4. Zero-day risks.

Some vulnerabilities are unknown until they’re used in an attack. Traditional testing reacts after the damage is done.

How AI Improves SAST/DAST/IAST?

Think of AI as an assistant that connects the dots across your testing layers.

| Type | Traditional Focus | How AI Improves It |

|---|---|---|

| SAST | Scans code for flaws before release. | Learns from previous findings and reduces false alarms. |

| DAST | Tests running applications for vulnerabilities. | Detects unusual behavior in real time and flags likely exploits. |

| IAST | Combines both static and dynamic testing views. | Correlates results and ranks vulnerabilities by real impact. |

In short, AI application security testing doesn’t replace these methods, it makes them work together and adapt faster to new threats.

📘 Want to Deep-Dive into AI’s Role in Testing?

Explore our in-depth whitepaper on how AI is reshaping the future of software testing – from predictive insights to autonomous validation.

👉 Download the Whitepaper: AI in Testing

Benefits and challenges of AI in App security testing

No technology is perfect. Here’s a quick look at the benefits and challenges of AI in App security testing, and what teams can do to manage them effectively.

| Benefits | What It Means |

|---|---|

| Faster detection | Security checks happen automatically and continuously. |

| Better accuracy | The system learns from previous results and fine-tunes over time. |

| Continuous protection | Risks are found during development, not after release. |

| Clear priorities | Teams focus on critical issues first. |

| Lower cost | Less repetitive manual work and reduced remediation effort. |

These challenges don’t make AI less valuable; they just remind teams to stay proactive by refining models, validating results, and ensuring smooth tool integration.

| Challenges | How to Handle Them |

|---|---|

| Models can drift | Keep retraining AI models with current threat and operational data to maintain accuracy. |

| Hard to explain results | Adopt systems that provide explainable AI (XAI) insights to clarify reasoning behind alerts or predictions. |

| False positives | Cross-verify AI-generated results through expert validation or secondary heuristic analysis. |

| Integration friction | Use continuous integration tools that connect seamlessly with your existing DevOps pipeline. |

Techniques That Make AI Testing Work

Machine Learning

AI models compare patterns across millions of lines of code. Over time, they start spotting subtle issues early, things a standard scanner would miss.

Natural Language Processing

A lot of security clues hide in documentation, configs, or logs. NLP test automation can read that text and find gaps between how things should work and how they actually do.

Continuous Testing

With AI application security testing integrated into your CI/CD pipeline, every code change is checked automatically. That turns security from a one-time activity into a constant feedback loop.

How to Secure AI-Based Applications?

When your product itself uses AI, the attack surface changes. Protecting it means watching the model and its data as closely as the code.

Here’s how to secure AI applications effectively:

- Clean and validate all data inputs.

- Build threat models that include AI-specific risks like poisoning and model theft.

- Test for prompt injection or adversarial behavior.

- Monitor output drift and irregular patterns.

- Follow standards such as NIST AI RMF and OWASP Top 10 for LLMs.

SUGGESTED READ - Risk-Based Testing with LLMs

AI App security testing tools to evaluate

Some platforms now blend automation, AI, and security into a single workflow. Here are a few examples of AI App security testing tools to evaluate:

- ACCELQ – Uses AI-driven automation and real-time scanning across web, API, and mobile layers. It integrates directly with CI/CD and adapts testing as the app evolves.

- Synopsys – Known for deep code and dependency analysis.

Checkmarx One – Applies AI to prioritize vulnerabilities by likelihood of exploit. - Veracode – Cloud-based testing with policy enforcement.

- Contrast Security – Focuses on real-time detection during runtime.

Top-rated Solutions for Secure AI Applications in Distributed Cloud

Platforms like ACCELQ Autopilot extend scanning and governance across cloud regions. They unify results, enforce compliance, and minimize duplicated effort, making them some of the top-rated solutions for secure AI applications in distributed cloud environments.

Frameworks That Guide AI Security

To keep testing accountable and explainable, organizations rely on trusted AI security frameworks such as:

- NIST AI Risk Management Framework

- MITRE ATLAS threat mapping for AI systems

- OWASP guidelines for AI

- ISO/IEC 42001 for AI management practices

They bring structure to how teams assess and track AI-driven risks.

How to Secure AI Applications & Improve Application Security?

AI isn’t magic. It’s a practical way to automate pattern detection, predict weaknesses, and connect context across systems. In real use, it helps teams:

- Find flaws faster

- Spot trends before they become incidents

- Cut noise from false alarms

- Produce clear, actionable reports

The result is more focus on fixing what matters, not drowning in scan data. That’s how AI improves application security testing across industries.

Future-Proof Your QA Automation

Explore AI-powered platform and enterprise-level quality.

Get Started

Conclusion

AI application security testing has moved from “nice to have” to essential. It speeds up detection, cuts manual effort, and adapts as threats change.

ACCELQ brings this intelligence into everyday testing. Teams using it have reported faster automation cycles, major cost reductions, and simpler maintenance. If you want to see how it fits into your workflow, book a demo and test it for yourself.

Geosley Andrades

Director, Product Evangelist at ACCELQ

Geosley is a Test Automation Evangelist and Community builder at ACCELQ. Being passionate about continuous learning, Geosley helps ACCELQ with innovative solutions to transform test automation to be simpler, more reliable, and sustainable for the real world.

You Might Also Like:

Supercharge Testing with AI: Automate & Accelerate Efficiency

Supercharge Testing with AI: Automate & Accelerate Efficiency

Supercharge Testing with AI: Automate & Accelerate Efficiency

Gen AI Use Cases: Practical Applications and Enterprise Framework

Gen AI Use Cases: Practical Applications and Enterprise Framework

Gen AI Use Cases: Practical Applications and Enterprise Framework

Agentic AI vs Generative AI: Understanding the Future of AI

Agentic AI vs Generative AI: Understanding the Future of AI