Testing Generative AI Systems: Navigating New Challenges

Generative AI systems represent a monumental shift in how businesses can tackle challenges and automate solving them. These “generative models” can create content, make decisions, and may even help humans become more creative. But with innovation comes complexity, especially when it comes to testing generative AI systems.

In contrast to typical software, generative AI is built on vast amounts of data and probabilistic models, presenting extraordinary challenges in terms of accuracy, consistency, quality, and practical application in the real world. From identifying hallucinations in generated content to ethical implications and model reliability, testing these systems will require a new way of thinking and an updated approach.

What Makes Generative AI Systems Hard to Test?

Testing generative AI systems differs significantly from how we typically test other types of software. What is the cause behind this, you might ask? The answer is that AI algorithms, and generative ones in particular, are simply probabilistic. While traditional software operates under certain deterministic rules, generative AI models generate outputs based on huge and unpredictable datasets. This introduces complexity around repeatability, correctness, and scalability of tests.

Yet another specialized GenAI testing challenge comes from the lifelong learning nature of the majority of generative AI systems. They may change over time; therefore, when we deploy a model, it may not behave as expected or may differ from what we designed when it is used in the real world. Furthermore, the models could exhibit unintended behavior due to the wide range of inputs, so it would be essential to monitor and test them iteratively.

How to Test Generative AI models and GenAI testing challenges

Testing generative AI systems involves overcoming several core GenAI testing challenges:

- Quality and Variability of Data: Good training data is essential for obtaining accurate outputs from a model. A lack of varied, high-quality data for the model can result in the generation of inaccurate or irrelevant information, potentially destroying the entire application it serves.

- Non-fixed Outputs: Generative artificial intelligence models in natural language generation and image synthesis do not output the same result when provided with the same input. This makes it difficult to test traditionally, comparing actual vs. expected results.

- How to detect Hallucinations in Generative AI Models: Hallucinations are among the most acute problems in generative AI. They are incorrect or nonsensical replies that the AI can produce and that can lead users astray or corrupt automated activities.

- Bias and Ethical Issues: Generative AI models may inadvertently replicate biases present in their training data. Detection and remediation of such biases require a systematic approach to testing beyond construction verification.

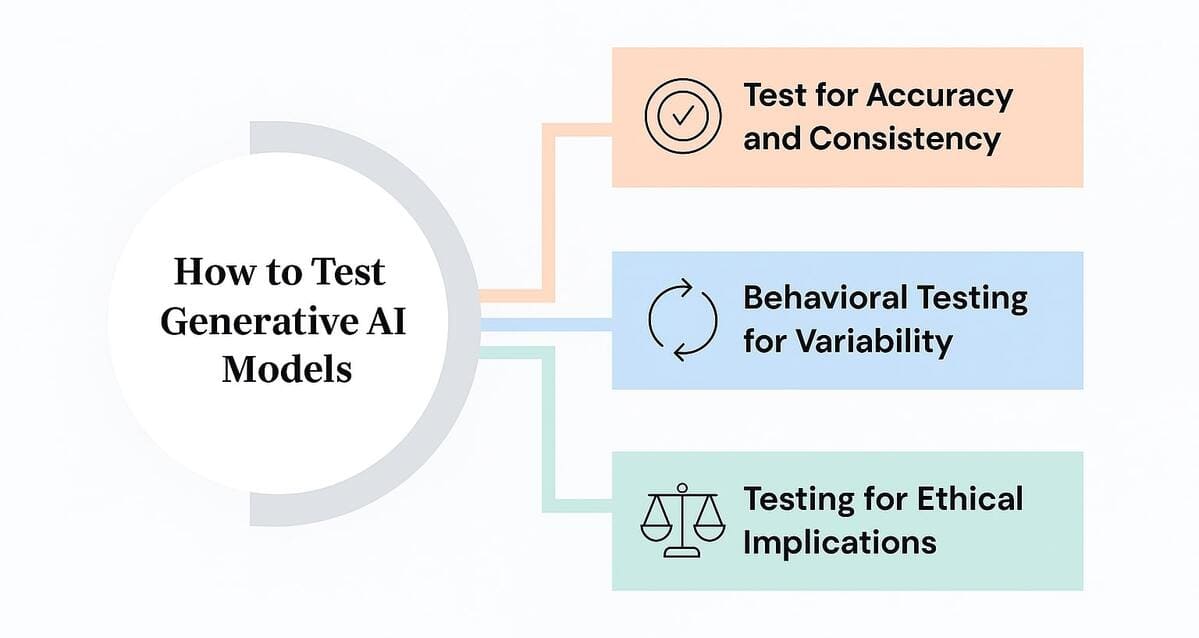

How to Test Generative AI Models?

To test generative AI models effectively, a different approach is required compared to traditional software testing. The following sections outline some of the key steps in testing these systems.

1. Test for Accuracy and Consistency

Although generative AI does not have fixed outputs, it should be possible to compare the generation with the outputs to assess its consistency and accuracy, and with the support for the observation. This may be achieved by testing generative AI models with an assessment of whether they generate valid and useful content, using predefined metrics, such as coherence, relevance and factual correctness.

2. Behavioral Testing for Variability

Due to the inherent variability in AI outputs, it is essential to implement tests that assess the model’s behavior across a broad range of inputs. This could involve stress testing with inputs designed to probe the edges of the model’s knowledge base and capability.

3. Testing for Ethical Implications

With the growing concerns about AI ethics, it’s crucial to test for potential biases in AI models. This can involve applying fairness audits and checking for biases across different demographic groups to ensure the AI produces ethical and fair outcomes.

Accelerate QA with Generative AI in Software Testing illustrates how AI can optimize testing practices.

Detecting Hallucinations in Generative AI Models

One of the most challenging aspects of testing generative AI is detecting hallucinations, when the AI model generates an output that is entirely fake or false. Hallucinations can cause a lot more trouble in application settings, such as builder bots or decision assistance, than just odd samples.

Learn more about the Top 10 Generative AI Testing Tools You Need to Watch in 2025 to enhance your testing processes.

Accuracy vs Hallucination

If one wanted to detect hallucinations in generative AI models, it would be desirable to decide what the correctness of generative AI means. Unlike typical software systems, the accuracy of outputs of generative AI has to be evaluated based on their appropriateness within a context and correctness with respect to facts. Hallucination detection. The common method to detect hallucinations consists of the following techniques:

- Human-in-the-loop review: Where experts review content generated by AI systems for accuracy.

- Automated Fact-checking Tools: Fact-checking tools that allow for cross-checking AI-generated text against trusted sources of information for accuracy.

- Output Validation: Developing system-level grounding for cross-referencing the legitimacy and validity of the outputs generated by AI across a similar sequence of inputs.

Discover the Best AI Testing Frameworks for Smarter Automation in 2025 to Enhance Your Testing Strategy.

Tools and Metrics for Detecting Hallucinations

There are various methods for detecting and mitigating hallucinations in generative AI models. There are a few really good possibilities, though:

- Natural Language Understanding (NLU) Models: They are capable of detecting anomalies or inconsistencies in an AI-generated text by being compared with factual databases or trained knowledge.

- Automated Evaluation Metrics: BLEU, ROUGE and F1 scores are metrics that can be adjusted to measure the quality and correctness of the generated content.

Building a Generative AI Testing Strategy

Businesses will need to adopt a fluid and iterative approach when implementing an effective testing strategy for working with generative AI models. What are the best practices for testing GenAI systems that can help make the implementation of such a solution successful?

Testing GenAI Systems: A Best Practice Guide

- Test Continuously: As generative AI solutions grow, being able to test and test and test them again is necessary. By setting up a feedback loop and quality monitor, you provide some guarantees that your evolving model stays true to the goals you want to reach.

- Define Clear Metrics: Clearly define what you want AI outputs to achieve. These might include coherence and relevance to factual truth, to name just a few metrics and ethical conundrums. By looking at these metrics, testers to easily assess if the AI model is up to the mark.

- Test for Edge Cases: Generative AI models tend to fall to the extremes, such as inputs that are rare or out of the ordinary. It is very important that such edge cases are tested, to make sure the AI system is robust and impervious in real-world conditions.

- Incorporate Ethical Guidelines: You should test for your ethical rules, such as bias or discrimination, or fairness. In this way, companies will maintain their AI models to operate within ethically accepted boundaries and avoid unintended repercussions.

Discover how Gen AI integrates with Agile DevOps for continuous testing in the software lifecycle.

Final Thoughts

Testing generative AI systems needs to shift its paradigm towards a testing strategy based on ongoing evaluation, consideration of ethical questions, and the detection of anomalies (e.g., hallucinations). With a combination of proactive thinking and best practices, our organizations can place the trust in generative AI systems that they deserve —to be reliable, scalable, and ethical.

As AI continues to become more integrated into business applications, a platform like ACCELQ can help teams create more effective testing. ACCELQ’s AI-driven test automation can handle the intricate demands of generative AI systems, while also offering advanced testing capabilities that increase productivity, eliminate manual effort, and elevate the quality of AI-powered applications.

Geosley Andrades

Director, Product Evangelist at ACCELQ

Geosley is a Test Automation Evangelist and Community builder at ACCELQ. Being passionate about continuous learning, Geosley helps ACCELQ with innovative solutions to transform test automation to be simpler, more reliable, and sustainable for the real world.

You Might Also Like:

AI-Powered Root Cause Analysis for Better Testing Outcomes

AI-Powered Root Cause Analysis for Better Testing Outcomes

AI-Powered Root Cause Analysis for Better Testing Outcomes

Designing AI Agents Workflow for Test Automation

Designing AI Agents Workflow for Test Automation

Designing AI Agents Workflow for Test Automation

AI-Driven Test Case Management for Maximizing Benefits

AI-Driven Test Case Management for Maximizing Benefits