Designing Agent Workflows for Test Automation: Patterns, Prompts, Guardrails

AI agent workflow automation is redefining how test automation works. Traditional scripts follow a fixed list of steps. AI agents, by contrast, plan, adapt, and collaborate. They do not just run tests; they make decisions, manage context, and coordinate different parts of the testing lifecycle.

In simple terms, AI agent workflow automation uses intelligent agents to handle everything from test generation to execution and reporting. Instead of executing a fixed plan, these agents learn from feedback and adjust their actions as they go.

This approach, often called agentic AI workflow automation, takes automation beyond scripted repetition. It introduces reasoning, autonomy, and coordination into testing. Agents act as orchestrators, connecting various tools, APIs, and data systems to create an adaptive testing ecosystem.

- Agents as Orchestrators of Test Automation

- Core Concepts: Tasks, Agents, and Workflow Orchestration

- Workflow Patterns for AI Testing Agents

- Prompt Engineering and Specification for Agents

- Guardrails, Validation, and Safety Mechanisms

- State and Memory Management in Agent Chains

- Error Handling, Rollbacks, and Recovery Modes

- Optimization and Performance Considerations

- Example: Agent Workflow Architecture for QA

- Governance and Best Practices for AI Agent Workflows

- Benefits of AI Agents Workflow Automation in Testing

- How AI Agent Workflow Automation Works in Practice

- Conclusion

Agents as Orchestrators of Test Automation

Most QA teams still rely on frameworks driven by scripts or static copilots. They can execute tests but rarely adapt when systems change or when context is missing.

That is where AI agents for workflow automation change the picture. These agents can read a requirement, create test cases, execute them, and validate the results while managing dependencies along the way. They operate with awareness, sharing memory and roles within a broader workflow.

An AI agent is not a simple prompt or LLM call. It carries context, has a defined role, and can interact with other agents. In large test environments, agents act like a team. One focuses on UI testing, another manages APIs, while a third validates data consistency. Together, they coordinate through structured communication instead of rigid scripts.

This shift marks a move from automation to orchestration. Agents act as intelligent conductors ensuring that every part of the testing process stays in sync.

Core Concepts: Tasks, Agents, and Workflow Orchestration

For agent workflow orchestration in QA to work, a few core principles must align.

- Task decomposition: Every goal is broken down into smaller, manageable tasks.

- Chaining: One agent’s output becomes another’s input to maintain context.

- Parallelization: Independent agents run simultaneously to speed up test cycles.

- State management: Agents track what has been done and what remains, preventing confusion.

- Prompt encapsulation: Each agent receives instructions, input types, and output formats that define its boundaries.

These principles turn isolated automation scripts into an interconnected system that collaborates like a human testing team.
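The principles above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `decompose`, `run_task`, `summarize`, and `WorkflowState` are hypothetical names, and `run_task` is a stand-in for a real agent call.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class WorkflowState:
    """State management: records what each finished task produced."""
    results: dict = field(default_factory=dict)

    def pending(self, tasks: list[str]) -> list[str]:
        return [t for t in tasks if t not in self.results]


def decompose(goal: str) -> list[str]:
    # Task decomposition. Hypothetical fixed split; a real planner
    # would derive the sub-tasks from the goal itself.
    return [f"{goal}: ui checks", f"{goal}: api checks", f"{goal}: data checks"]


def run_task(task: str) -> str:
    # Stand-in for an agent call (assumption: agents return plain text).
    return f"done({task})"


def summarize(outputs: list[str]) -> str:
    # Chaining: the outputs of the earlier agents are this step's input.
    return f"{len(outputs)} tasks completed"


def run_workflow(goal: str) -> tuple[WorkflowState, str]:
    state = WorkflowState()
    tasks = decompose(goal)
    # Parallelization: the sub-tasks are independent, so run them concurrently.
    with ThreadPoolExecutor(max_workers=3) as pool:
        for task, output in zip(tasks, pool.map(run_task, tasks)):
            state.results[task] = output
    return state, summarize(list(state.results.values()))
```

Calling `run_workflow("checkout")` returns the populated state together with a one-line summary, and `state.pending(...)` shows which tasks, if any, still need to run.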

Workflow Patterns for AI Testing Agents

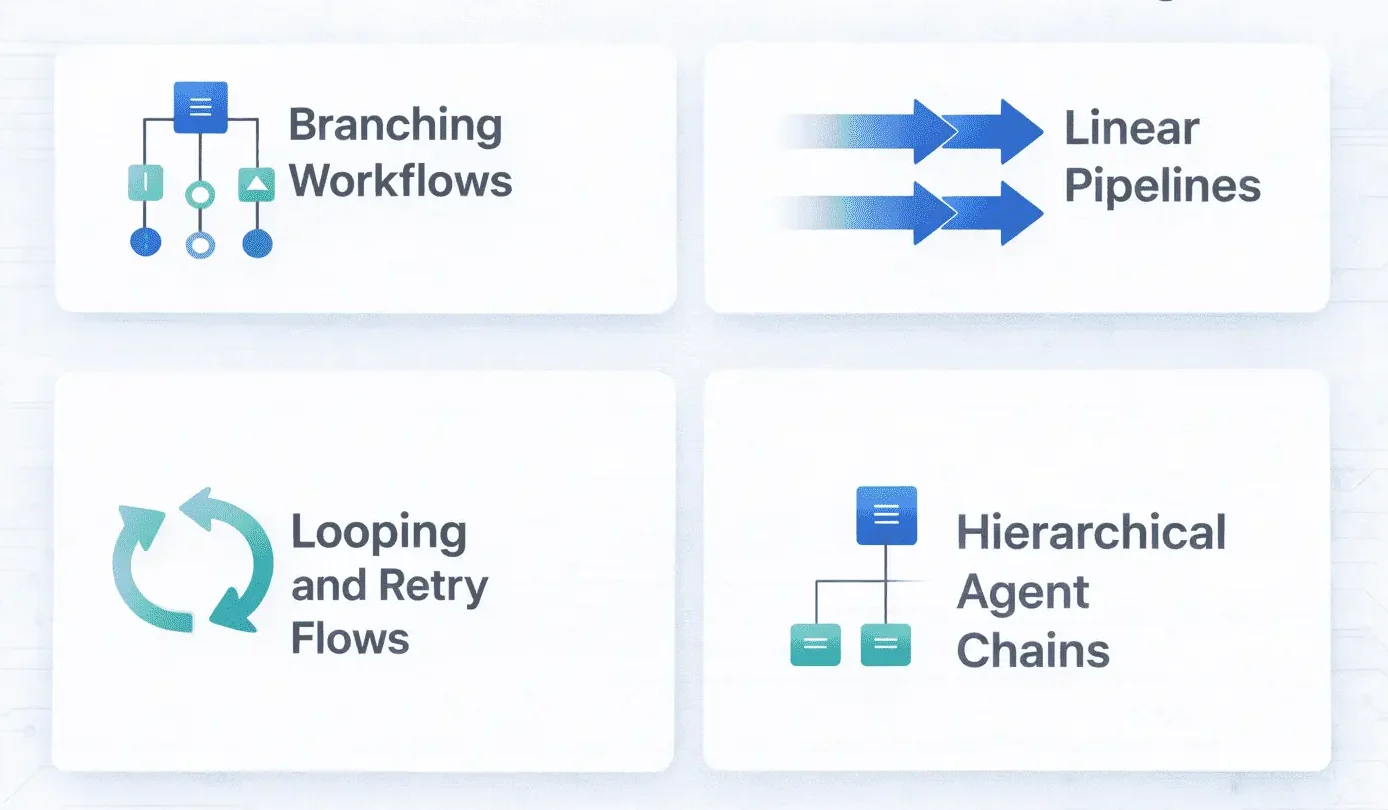

When designing workflow patterns for AI testing agents, structure determines reliability and maintainability. The most effective patterns include:

- Branching workflows: Conditional logic paths that react to environment issues, failed validations, or timeouts.

- Linear pipelines: A straight sequence that moves from specification to generation, execution, and reporting.

- Looping and retry flows: Used to revalidate dynamic behaviors such as load and performance.

- Hierarchical agent chains: A central agent supervises specialized agents for UI, API, and database validation.

These workflow patterns for AI agents in testing ensure flexibility. If one agent fails, others can recover or rerun their steps without collapsing the entire flow.
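Two of these patterns, branching and retry, are small enough to show directly. The sketch below assumes that a step signals a transient failure by raising `RuntimeError` and that stage names such as `"regenerate_test"` are illustrative labels; neither comes from a specific framework.

```python
from typing import Callable


def run_with_retry(step: Callable[[], str], max_attempts: int = 3) -> str:
    """Looping and retry flow: rerun a step until it succeeds or attempts run out."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return step()
        except RuntimeError as err:
            last_error = err
    raise RuntimeError(f"gave up after {max_attempts} attempts") from last_error


def route(result: str) -> str:
    """Branching workflow: pick the next stage from the outcome of the last one."""
    if result == "timeout":
        return "retry_execution"
    if result == "validation_failed":
        return "regenerate_test"
    return "report"


def make_flaky_step(failures_before_success: int) -> Callable[[], str]:
    """Hypothetical step that fails a set number of times, then passes."""
    calls = {"count": 0}

    def step() -> str:
        calls["count"] += 1
        if calls["count"] <= failures_before_success:
            raise RuntimeError("environment not ready")
        return "passed"

    return step
```

A step that fails twice and then passes survives `run_with_retry` with the default three attempts, while one that keeps failing raises once the limit is reached, so the loop can never run forever.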

Prompt Engineering and Specification for Agents

Agents rely on prompts that define their purpose, scope, and limits. Designing these prompts is both an art and a science.

- Task prompt templates: Reusable blueprints for common testing actions such as regression validation or data setup.

- Dynamic prompts: Contextual instructions that change based on test state or environment.

- Role definition: Assigning clear responsibilities like “UI Validator” or “API Monitor.”

- Input-output schemas: Ensuring agents communicate with predictable formats.

- Prompt chaining: Passing results or memory between agents to preserve continuity.

Good prompt design gives agents clarity and consistency, preventing unnecessary loops or incorrect reasoning during testing.
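As a rough sketch of how a template, a role, and an input-output schema fit together, the example below uses only the Python standard library. `AgentSpec`, `PROMPT_TEMPLATE`, and the JSON reply convention are assumptions made for illustration, not part of any particular agent framework.

```python
import json
from dataclasses import dataclass
from string import Template

# Task prompt template: a reusable blueprint with named slots.
PROMPT_TEMPLATE = Template(
    "Role: $role\n"
    "Task: $task\n"
    "Context: $context\n"
    "Reply with JSON containing exactly these keys: $keys"
)


@dataclass(frozen=True)
class AgentSpec:
    role: str                      # role definition, e.g. "UI Validator"
    output_keys: tuple[str, ...]   # output schema the agent must follow

    def build_prompt(self, task: str, context: str = "none") -> str:
        # Dynamic prompt: task and context change per run, the blueprint does not.
        return PROMPT_TEMPLATE.substitute(
            role=self.role,
            task=task,
            context=context,
            keys=", ".join(self.output_keys),
        )

    def parse_reply(self, reply: str) -> dict:
        # Reject replies that do not match the declared schema.
        data = json.loads(reply)
        if not isinstance(data, dict) or set(data) != set(self.output_keys):
            raise ValueError(f"reply does not match keys {self.output_keys}")
        return data
```

Prompt chaining then amounts to passing a field from one agent's parsed reply as the `context` argument when building the next agent's prompt.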

Guardrails, Validation, and Safety Mechanisms

Autonomy requires control. Guardrails ensure that agents act within safe and defined boundaries.

- Output validation: Every agent output is checked against a schema or expected format before being used downstream.

- Confidence thresholds: Agents should act only when their certainty crosses a predefined score. Otherwise, they flag the issue for review.

- Execution limits: Setting boundaries for time, steps, and retries prevents infinite loops.

- Sandbox testing: New agent behaviors are tested in isolated environments before being promoted to production workflows.

These guardrails keep AI agents in a test automation framework predictable, traceable, and auditable.
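Three of these guardrails (output validation, a confidence threshold, and an execution limit) can be combined in one small checker. The sketch below is illustrative only: the key names, the 0.8 threshold, and the five-step limit are assumed values, and `agent` is any callable that returns a dict.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Guardrails:
    required_keys: frozenset = frozenset({"verdict", "confidence"})
    min_confidence: float = 0.8   # assumed threshold
    max_steps: int = 5            # assumed execution limit

    def check_output(self, output: dict) -> str:
        """Return 'accept', 'review', or 'reject' for one agent output."""
        # Output validation: required keys present, confidence in range.
        if not self.required_keys.issubset(output):
            return "reject"
        confidence = output["confidence"]
        if not isinstance(confidence, (int, float)) or not 0.0 <= confidence <= 1.0:
            return "reject"
        # Confidence threshold: below it, flag for human review.
        return "accept" if confidence >= self.min_confidence else "review"


def run_until_usable(agent: Callable[[], dict], guardrails: Guardrails) -> tuple[str, int]:
    """Call the agent until its output is usable, never exceeding max_steps."""
    for attempt in range(1, guardrails.max_steps + 1):
        decision = guardrails.check_output(agent())
        if decision != "reject":
            return decision, attempt
    return "halted", guardrails.max_steps
```

An agent that never produces valid output ends in `"halted"` after five attempts instead of looping indefinitely, and a low-confidence answer is routed to `"review"` rather than passed downstream.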

State and Memory Management in Agent Chains

State and memory define how well agents collaborate. When one agent finishes, it must hand off the right data and context to the next.

Shared memory allows agents to remember test parameters, execution history, and decisions. Logging every step creates transparency and traceability. In long-running workflows, state resets prevent confusion or drift caused by outdated information.

By combining memory persistence with controlled resets, teams maintain reliable agent chains that learn continuously but never lose alignment.
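One way to picture this is a shared memory object that records every handoff and supports a controlled reset. The class below is a hypothetical sketch; `SharedMemory`, its fields, and the example URL are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SharedMemory:
    run_id: str
    context: dict = field(default_factory=dict)  # data visible to every agent
    log: list = field(default_factory=list)      # append-only handoff history

    def handoff(self, sender: str, receiver: str, **data) -> None:
        """Pass data to the next agent and log which keys changed hands."""
        self.context.update(data)
        self.log.append((sender, receiver, sorted(data)))

    def reset(self, keep: tuple = ()) -> None:
        """Controlled reset: drop working context, keep named keys and the full log."""
        self.context = {k: v for k, v in self.context.items() if k in keep}
        self.log.append(("system", "system", ["reset"]))


memory = SharedMemory(run_id="run-1")
memory.handoff("planner", "generator",
               base_url="https://staging.example.test", risk_areas=["checkout"])
memory.handoff("generator", "executor", test_ids=["t1", "t2"])
memory.reset(keep=("base_url",))
```

After the reset, only `base_url` remains in the working context, while the log still holds all three entries, so stale data is cleared without losing traceability.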

Error Handling, Rollbacks, and Recovery Modes

Failures are inevitable, but intelligent handling prevents disruption.

- Exception catching agents: Specialized agents monitor the flow for unexpected conditions.

- Rollback sequences: Agents can undo partial actions when a later stage fails.

- Compensating actions: If a rollback is not possible, agents apply corrective steps to maintain integrity.

- Partial completion safeguards: Workflows record what was completed so re-execution resumes from that point.

These recovery mechanisms make AI-driven test case management workflows resilient and self-correcting rather than fragile and reactive.
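A compact way to express rollback and partial completion is to pair every action with an undo step and to keep a set of completed step names. The sketch below assumes each step is a `(name, action, undo)` tuple; the function and step names are hypothetical.

```python
from typing import Callable

# A step is (name, action, undo). Where a true rollback is impossible,
# the undo callable can instead perform a compensating action.
Step = tuple[str, Callable[[], None], Callable[[], None]]


def run_with_rollback(steps: list[Step], completed: set[str]) -> bool:
    """Run steps in order, skipping finished ones; undo this run's work on failure."""
    done_this_run: list[Step] = []
    for step in steps:
        name, action, _undo = step
        if name in completed:
            continue  # partial completion safeguard: resume after earlier work
        try:
            action()
        except Exception:
            # Rollback sequence: undo in reverse order of execution.
            for undone_name, _action, undo in reversed(done_this_run):
                undo()
                completed.discard(undone_name)
            return False
        completed.add(name)
        done_this_run.append(step)
    return True
```

If `completed` already contains `"seed_data"` from an earlier run, that step is skipped. When a later step raises, only the steps executed in the current run are undone, in reverse order, and the earlier checkpoint is left intact.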

Optimization and Performance Considerations

While AI agents increase adaptability, they also consume compute and API resources. Optimization is essential.

- Cache intermediate results so repeated prompts are not regenerated.

- Parallelize independent subflows wherever possible.

- Control costs by limiting high-token or API-expensive operations.

- Monitor and log agent performance metrics such as decision time, call frequency, and accuracy.

Performance monitoring is critical for scaling. As agents multiply, telemetry data helps identify which parts of the workflow are worth optimizing or simplifying.
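Caching and metrics collection can be layered with two decorators from the standard library. In this sketch `generate_tests` is a stand-in for an expensive model call, and the `metrics` dictionary is an assumed in-memory store rather than a real telemetry backend.

```python
import time
from collections import defaultdict
from functools import lru_cache

# Assumed in-memory metrics store: call count and total time per agent.
metrics = defaultdict(lambda: {"calls": 0, "seconds": 0.0})


def timed(name: str):
    """Record how often and how long the wrapped function actually runs."""
    def wrap(fn):
        def inner(*args):
            start = time.perf_counter()
            try:
                return fn(*args)
            finally:
                metrics[name]["calls"] += 1
                metrics[name]["seconds"] += time.perf_counter() - start
        return inner
    return wrap


@lru_cache(maxsize=256)      # cache sits outside, so hits skip the timed call
@timed("generate_tests")
def generate_tests(requirement: str) -> tuple[str, ...]:
    # Stand-in for an expensive model call (hypothetical output format).
    return (
        f"test that {requirement} succeeds",
        f"test that {requirement} rejects bad input",
    )
```

Because the cache wraps the timed function, a repeated requirement is served from the cache and does not increment the call counter. Comparing `metrics` with `generate_tests.cache_info()` shows how many expensive calls were avoided.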

Example: Agent Workflow Architecture for QA

To illustrate, consider a workflow that generates, executes, and verifies tests for a web application.

- Planning Agent: Reads user stories or requirements and identifies high-risk areas.

- Test Generation Agent: Creates test cases and stores them in a repository.

- Execution Agent: Triggers relevant tests across browsers, APIs, and data layers.

- Validation Agent: Compares results with expected outcomes and flags inconsistencies.

- Reporting Agent: Summarizes outcomes and updates dashboards or Slack channels.

Each agent communicates through structured prompts and data contracts. If the validation agent detects abnormal behavior, it can trigger a retry or request a new test case. This is one example of agentic workflow automation in testing that blends autonomy with control.
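The five-agent flow can be mocked up with plain functions to show how the handoffs and the validation-triggered retry fit together. Everything here is a simplified assumption: the agents are ordinary functions rather than LLM-backed components, `CaseSpec` is a hypothetical data contract, and the "payment" risk rule is invented for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class CaseSpec:
    """Data contract passed between agents."""
    name: str
    expected: int  # expected HTTP status, as an example outcome


def planning_agent(stories: list[str]) -> list[str]:
    # Hypothetical risk rule: stories mentioning "payment" are high risk.
    return [s for s in stories if "payment" in s]


def generation_agent(areas: list[str]) -> list[CaseSpec]:
    return [CaseSpec(name=f"verify {a}", expected=200) for a in areas]


def execution_agent(cases: list[CaseSpec], run: Callable[[CaseSpec], int]) -> dict:
    return {c.name: run(c) for c in cases}


def validation_agent(cases: list[CaseSpec], results: dict) -> list[str]:
    return [c.name for c in cases if results[c.name] != c.expected]


def reporting_agent(cases: list[CaseSpec], failures: list[str]) -> str:
    return f"{len(cases) - len(failures)}/{len(cases)} passed"


def run_pipeline(stories: list[str], run: Callable[[CaseSpec], int],
                 max_retries: int = 1) -> str:
    cases = generation_agent(planning_agent(stories))
    results = execution_agent(cases, run)
    failures = validation_agent(cases, results)
    for _ in range(max_retries):
        if not failures:
            break
        # Validation triggers a retry of only the failing cases.
        retry = [c for c in cases if c.name in failures]
        results.update(execution_agent(retry, run))
        failures = validation_agent(cases, results)
    return reporting_agent(cases, failures)
```

The `run` argument is where a real execution backend would plug in. Passing a fake runner that fails once and then succeeds is a quick way to confirm that the retry path reruns only the failing case.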

Governance and Best Practices for AI Agent Workflows

The larger the enterprise, the stronger the need for governance for AI agent automation. Teams must balance autonomy with oversight.

- Version control: Each workflow and prompt template should be versioned for traceability.

- Audit trails: Maintain detailed logs of agent decisions and data handoffs.

- Human-in-the-loop checkpoints: Review sensitive or high-impact decisions.

- Incremental rollout: Introduce new agent behaviors gradually and monitor impact.

- Transparency: Ensure every automated decision can be explained and reviewed.

When evaluating AI agents for test automation, focus on their reasoning accuracy, error-handling maturity, and ability to integrate with your existing QA ecosystem.

These are also part of the best practices for AI agent workflows, ensuring safety, scalability, and predictability.
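Version control, audit trails, and human-in-the-loop checkpoints can be tied together in a small gate function. The following sketch makes several assumptions: prompt versions are derived from a content hash, the audit trail is an in-memory list, and `request_action` is a hypothetical entry point that agents call before acting.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


def prompt_version(template: str) -> str:
    """Short content hash so an audit entry pins the exact prompt text used."""
    return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]


@dataclass
class AuditTrail:
    entries: list = field(default_factory=list)

    def record(self, agent: str, action: str, version: str,
               approved_by: Optional[str] = None) -> None:
        self.entries.append({
            "agent": agent,
            "action": action,
            "prompt_version": version,
            "approved_by": approved_by,
        })


def request_action(trail: AuditTrail, agent: str, action: str, version: str,
                   high_impact: bool, approver: Optional[str] = None) -> bool:
    """Human-in-the-loop checkpoint: high-impact actions need a named approver."""
    if high_impact and approver is None:
        trail.record(agent, f"BLOCKED {action}", version)
        return False
    trail.record(agent, action, version, approved_by=approver)
    return True
```

Every request, whether allowed or blocked, leaves an entry that names the agent, the prompt version, and the approver, which is the minimum needed to explain a decision after the fact.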

Benefits of AI Agents Workflow Automation in Testing

Well-structured agent workflows bring measurable gains.

- Higher accuracy through continuous self-validation.

- Reduced test maintenance with modular task ownership.

- Improved test coverage through intelligent scenario generation.

- Faster release cycles due to autonomous orchestration.

- Less human intervention and better resource efficiency.

The benefits of AI agent workflow automation in testing become evident as teams move from static automation to adaptive intelligence.

How AI Agent Workflow Automation Works in Practice

Here is a simplified overview of how AI agent workflow automation works:

- Define the overall test objective and environment parameters.

- Decompose the goal into modular tasks assigned to specific agents.

- Configure prompts, input-output schemas, and validation logic.

- Execute the workflow with monitoring and feedback loops.

- Review results, adjust prompts, and iterate for improved accuracy.

These steps to build an AI agent workflow in test automation form the foundation of continuous, intelligent QA orchestration.

Conclusion

AI agents are changing how testing teams think about automation. Instead of writing fixed scripts, teams can now design intelligent systems that plan their own actions, make informed decisions, and collaborate with other agents to complete complex tasks. The challenge is not to hand everything over to automation but to design it thoughtfully so that control and adaptability stay in balance.

When you design workflows with clear patterns, defined prompts, and built-in guardrails, you give agents the structure they need to perform reliably. Memory, context, and state management make them consistent. Governance and transparency make them trustworthy.

With ACCELQ, the real value of AI agent workflow automation lies in how it helps QA teams move faster without losing quality. It is not about replacing people. It is about creating smarter systems that work alongside them. The future of testing belongs to teams that can build these agentic systems with intent, measure their outcomes, and keep improving how machines and humans collaborate.

Geosley Andrades

Director, Product Evangelist at ACCELQ

Geosley is a Test Automation Evangelist and Community builder at ACCELQ. Being passionate about continuous learning, Geosley helps ACCELQ with innovative solutions to transform test automation to be simpler, more reliable, and sustainable for the real world.
